TL;DR

Hiring systems are becoming increasingly autonomous. Recruiters worry about losing authority, managers worry about losing accountability, and candidates worry about being reduced to deprioritised data on recruiters’ screens. These reactions are predictable and entirely human.

When AI starts acting on decisions instead of just predicting needs and suggesting next steps, discomfort increases. Better code alone cannot fix that; it has to be addressed through deliberate design. Teams building AI systems, including large language models, are increasingly designing for trust, because trust is the fastest route to wider adoption and to the feedback that helps these systems learn.

Across markets, recruiters tend to adopt AI only when it is transparent, understandable, customizable, and clearly positioned as an assistant rather than a replacement. They trust it when it strengthens their judgment instead of overriding it.

Candidates, too, accept it when it feels fair and accountable. Hence, it is trust that determines the speed of adoption, not cost or features.

When designed well, agentic AI solutions bridge the gap between risky and reliable.

Why Trust Is A Real AI Hiring Problem

Most conversations about AI in hiring still get stuck on its features and what it can do for you. Can it screen faster? Can it rank candidates with more insightful inferences? Can it automate interviews or handle scheduling without the hassle?

All of that matters. But when you zoom out, the real deal-breaker is trust (or the lack of it), not the agentic AI’s capabilities.

Recruiters’ biggest worry is ceding control to a system they cannot fully see into or steer. Hiring managers worry that they will bear the brunt of criticism if models hallucinate or get things wrong. Candidates are concerned that they will be reduced to data points in a system that quietly filters them out without a fair and just ‘trial’ where they can have their say.

In other words, the psychology of trust is now just as important as the implementation of the agentic AI solutions.

Agentic AI is designed to plan, decide, and act with far less human prompting than a simple prompt-driven tool. That added autonomy makes trust even more indispensable.

If we ignore the human side, even the most powerful agentic AI solutions will sit on the shelf.

What Agentic AI Actually Is

Before we get too deep into the psychology of behavior and perception, it helps to define what agentic AI actually is.

Traditional AI in HR has mostly been reactive. It scores, suggests, recommends, or predicts when a recruiter asks it to.

Agentic AI is a step beyond that. It refers to systems that can pursue goals more autonomously. Instead of simply providing a score, they can orchestrate a sequence of actions across tools and workflows to move a hiring process forward with limited human intervention.

In a recruiting context, that could mean:

- Monitoring incoming applications and automatically shortlisting likely candidates

- Triggering automatic structured video interviews for pre-qualified candidates

- Following up with nudges or reminders when someone stalls

- Flagging risk patterns to a recruiter before a decision is even made

Sounds ideal? It does, but in practice, the more independent the system feels, the more it runs into a very human problem.

People rarely admit it, but they do not like giving up control.

Why Humans Naturally Resist Algorithms

Behavioral scientists have a name for human resistance to automated decision systems like agentic AI: algorithm aversion. Research shows that people lose confidence in an algorithm quickly after seeing it make even small mistakes. Call it judgmental, but people often prefer a human decision-maker over an algorithm, even when the algorithm is more accurate overall and can help reduce inherent human biases.

In the context of hiring, experiments have found that both workers and managers often avoid having an algorithm make the final hiring choice, even when doing so is not in their own best interest.

Why does that happen?

A few psychological triggers show up again and again:

- Illusion of control: We are more comfortable with human error than machine error because we feel we can reason with a human.

- Loss of agency: If an HR recruiter has built years of expertise in their field, they can feel demoted if the final decision is left to an AI agent.

- Black box discomfort: If the system fails to explain its decisions, human recruiters may assume the worst. That is especially damaging in hiring, where decisions affect careers and, therefore, livelihoods.

- Status and identity: For many HR and TA professionals, judgment is part of their professional identity. A highly autonomous agentic AI solution can feel like a threat to that identity.

When these psychological factors collide with more autonomous agentic AI solutions, the outcome is easy to predict: the more powerful the system seems, the more quietly people resist it.

How Distrust Shows Up In Real Hiring Decisions

Distrust in agentic AI rarely looks like outright rejection.

Most of the time, it shows up in subtle behavior patterns such as:

- Recruiters overriding AI-generated shortlists because a different candidate “feels right”

- Hiring managers asking for extra manual checks “just to be safe”

- Budgeted AI tools showing low adoption rates in routine technology audits

- HR teams keeping manual Excel sheets as a “backup” in case the AI fails

Research has shown that even when AI-driven recommendations improve resume screening performance, as we have seen at Hyring itself, recruiters do not always follow them. They often discount or partially ignore the AI’s advice, especially when it clashes with their own initial intuition, which earlier research has shown to be prone to bias.

In a world moving toward more agentic AI, this tension becomes more pronounced. If a large part of the funnel can be automated by agentic AI solutions and the humans in the loop do not fully trust it, you get friction, not transformation.

The Candidate Side Of The Trust Gap

If you thought trust was only an internal concern for a company and its employees, think again. Candidates are forming their own views about AI in hiring, and they are not yet convinced: feedback is mixed.

AI tools are perceived as useful and efficient, particularly for early screening, when they are framed clearly and transparently.

However, a recent Gartner survey found that only about 26 percent of job candidates trust AI to evaluate them fairly, even though more than 50 percent already assume that AI is handling their preliminary screening.

That is a significant trust gap.

From a candidate's perspective, common fears include:

- Being unfairly filtered out because of AI errors or hallucinations

- Getting no clear reason for a rejection

- Being penalised by biases in the training data, with no human element to catch them

- Feeling dehumanised by the lack of human interaction and decision-making

If your talent brand is built around empathy and inclusion, you cannot afford to ignore those perceptions.

The more you integrate agentic AI into your hiring stack, the more important it becomes to show candidates that the system is accountable, human-supervised, and aligned with the values they expect and appreciate.

The Recruiter And Hiring Manager Side

Internally, the psychology of trust looks and works a bit differently.

Recruiters are not against technology at all. Most are drowning in work and happy to hand boring, repetitive tasks to automation. What they resist is automation that quietly sidelines their expertise.

Their resistance usually comes from three places:

- Fear of being replaced instead of being supported

If the narrative is that agentic AI solutions will “take care of hiring”, recruiters will understandably push back, and hard.

- Skepticism about quality

If candidate ranking and screening aren’t transparent, even a single odd or out-of-line suggestion can become evidence that the system cannot be fully trusted.

- Compliance anxiety

Regulations like the EU AI Act explicitly classify AI systems used in employment, including recruitment and candidate screening, as high-risk. HR leaders know they will be held accountable if something goes wrong, and that piles on the anxiety.

Under this pressure, defaulting to human judgment feels like the safer choice, regardless of what the data says in favor of agentic AI.

This is why education, involvement, and transparency matter just as much as the underlying model performance when you introduce more agentic AI solutions.


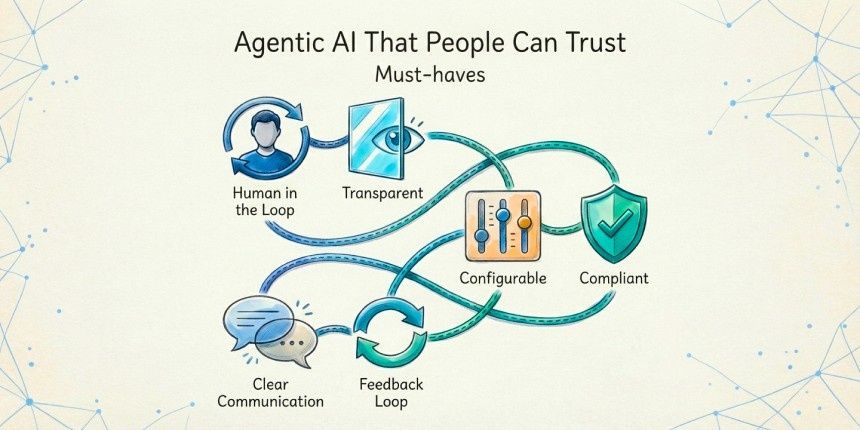

Designing Agentic AI Solutions People Can Trust

You cannot talk people into trusting a system. You have to design for trust.

For agentic AI in hiring, that comes down to a few practical design choices.

1. Human-in-the-loop by design

Make it clear where AI comes in and where humans have the final say. Agentic AI solutions should elevate recruiters, not override them.

2. Transparent criteria, not random scores

Show which skills, experiences or behaviors led to a recommendation. Even high-level explanations build more confidence than a black-box rating.

3. Configurable autonomy

Let teams configure and adjust the level of automation. Start with agentic AI handling low-risk tasks like reminders or basic screening, then expand as trust grows.

4. Regulatory awareness baked in

For high-risk use cases like employment, explicitly align design with emerging regulations. Document data sources, testing, bias checks, and monitoring.

5. Clear candidate communication

Tell candidates when AI is used, what it does, and how human reviewers stay in control. Simple explanations go a long way in closing the trust gap.

6. Feedback loops for recruiters and candidates

Make it easy for people to flag unexpected results, challenge decisions, or provide qualitative feedback. A system that listens and learns is a system people are more likely to trust.
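To make the first three principles more concrete, here is a minimal Python sketch of configurable autonomy with a human-in-the-loop gate. It assumes each action an agent proposes carries a risk tier and the reasons behind it. Actions within the team's configured autonomy level run automatically, and everything else goes to a human reviewer. All names here (`Risk`, `ProposedAction`, `AutonomyPolicy`, `route`) and the three risk tiers are hypothetical illustrations, not taken from Hyring or any other product.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Risk(IntEnum):
    """Hypothetical risk tiers for actions an agent might propose."""
    LOW = 1     # e.g. sending an interview reminder
    MEDIUM = 2  # e.g. shortlisting a candidate for a screening interview
    HIGH = 3    # e.g. rejecting a candidate


@dataclass
class ProposedAction:
    candidate_id: str
    kind: str
    risk: Risk
    # Criteria behind the action, surfaced to reviewers instead of a bare score.
    reasons: list[str] = field(default_factory=list)


@dataclass
class AutonomyPolicy:
    # Highest risk tier the agent may execute without a human; teams raise it as trust grows.
    max_autonomous_risk: Risk = Risk.LOW

    def route(self, action: ProposedAction) -> str:
        """Return "execute" if the agent may act alone, otherwise "human_review"."""
        if not action.reasons:
            # An action the agent cannot explain always goes to a person.
            return "human_review"
        if action.risk <= self.max_autonomous_risk:
            return "execute"
        return "human_review"


if __name__ == "__main__":
    policy = AutonomyPolicy(max_autonomous_risk=Risk.LOW)
    reminder = ProposedAction("c-101", "send_reminder", Risk.LOW, ["No reply in 3 days"])
    rejection = ProposedAction("c-102", "reject", Risk.HIGH, ["Missing required certification"])
    print(policy.route(reminder))   # execute
    print(policy.route(rejection))  # human_review
```

In this sketch, expanding autonomy is a single configuration change, for example `AutonomyPolicy(max_autonomous_risk=Risk.MEDIUM)`. High-risk actions such as rejections still land with a human reviewer.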

Well-designed agentic workflows like Hyring’s do not feel like a loss of control. They feel like a partnership, where AI handles the volume and the weight of analyzing millions of patterns so that humans can focus on judgment, empathy, and emotional engagement.

Bringing It All Together

The question in the industry today is not "Can Agentic AI help us hire better?"

We already know it can. The data is clear. The harder question is "Will people trust the way we use it?"

As hiring tech shifts from simple, reactive recommendation engines to more agentic AI solutions that can plan and act across the funnel, the stakes for trust rise.

Candidates want to know they are being judged fairly. Recruiters want to know that they are still the experts in the room. Leaders want to know whether the systems they endorse and heavily invest in will stand up to ethical and legal scrutiny.

If we take the psychology of trust seriously and design agentic AI solutions that are transparent, accountable, and human-centric, AI will not feel like the threat it is often made out to be.

It will feel like what it should have been all along: a smarter friend that helps you hire better.

FAQs

1. Why do experienced recruiters still hesitate to trust agentic AI?

That’s because it feels like they are giving up control. When systems start acting independently, even confident professionals worry about being sidelined or second-guessed by software.

2. Does more automation automatically mean less human control?

No, it doesn’t mean relinquishing control. Well-designed systems let you decide how much autonomy the AI gets, so you can keep humans in the loop while still benefiting from speed and scale.

3. Is distrust in AI really emotional, not technical?

Mostly, yes. People often reject systems not because those systems perform poorly, but because the people using them feel invisible or powerless. Trust fails when explanations fail.

4. Can agentic AI ever earn trust in hiring?

Yes, but only when it is transparent, clearly supervised by humans, and designed to assist rather than override judgment. Autonomy without accountability never works.

5. What is one sign an AI hiring system will struggle with adoption?

When users quietly double-check everything, it suggests high levels of mistrust. Low adoption is usually a trust problem, not a technology problem.